For over a century, scientists have chipped away at the mystery of consciousness. We now understand how the brain integrates sensory input, directs attention, sleeps, remembers, makes decisions, and reports internal states. We can map networks, perturb them, read out signals, and even induce sensations or memories. Yet the “hard problem,” as philosopher David Chalmers famously called it, endures: how do physical processes in the brain give rise to subjective experience?

As artificial intelligence grows ever more sophisticated and neurotechnology begins to interface directly with the brain, the question is no longer academic. Understanding consciousness has become one of the defining scientific and ethical challenges of our time - one that could transform medicine, law, and technology itself. Cleeremans, Mudrik, and Seth’s 2025 Frontiers in Science article sketches a roadmap for the field’s next phase: one grounded in theory, collaboration, and technology capable of exploring consciousness from the inside out.

For decades, neuroscience has pursued the neural correlates of consciousness - the patterns of brain activity that accompany awareness. The authors of the Frontiers article argue that the field is ready to move beyond correlation toward testable theories that explain why and how consciousness arises. Several competing frameworks dominate current debates.

The Global Workspace Theory holds that information becomes conscious when it is “broadcast” through a global workspace, integrating signals and distributing them across specialized modules. The Higher-Order Thought Theory proposes that we become conscious of an experience when the brain represents that experience to itself. The Integrated Information Theory (IIT) posits that consciousness reflects the degree of causal integration within a system, while Predictive Processing portrays perception as the brain’s best guess - a controlled hallucination constantly updated by sensory feedback.

Each model arguably captures part of the truth, yet none has achieved consensus. What’s shifting now is the realization that empirical competition - testing, falsifying, and comparing theories - may finally yield convergence. Major international collaborations such as Cogitate and INTREPID, backed by the Templeton World Charity Foundation, are setting the stage for the first large-scale, theory-driven tests of competing theories of consciousness.

Historically, the science of consciousness has been fragmented - small labs, niche journals, and deep philosophical divides. But the new wave of consciousness research emphasizes adversarial collaboration: opposing theorists jointly design experiments to test each other’s claims. It’s a model borrowed from psychology and particle physics, where rival frameworks must meet on common empirical ground.

This culture of openness extends beyond theory: shared datasets, pre-registered protocols, and citizen-science projects such as The Perception Census aim to map, at scale, how subjective experience varies among individuals. The next wave of consciousness research should draw on more data, work across disciplines, and embrace new methods.

Emerging technologies promise to push consciousness research far beyond the lab. Extended reality - augmented and virtual environments combined with wearable brain imaging - allows scientists to study awareness in lifelike settings. Computational neurophenomenology, which blends AI-driven modeling with human introspection, aims to bridge subjective and objective accounts of experience.

Meanwhile, neurotech companies such as Neuralink, Kernel, and Synchron are developing brain-computer interfaces capable of reading and writing neural activity at unprecedented resolution. Such devices could let researchers probe awareness and levels of consciousness in more contexts than ever.

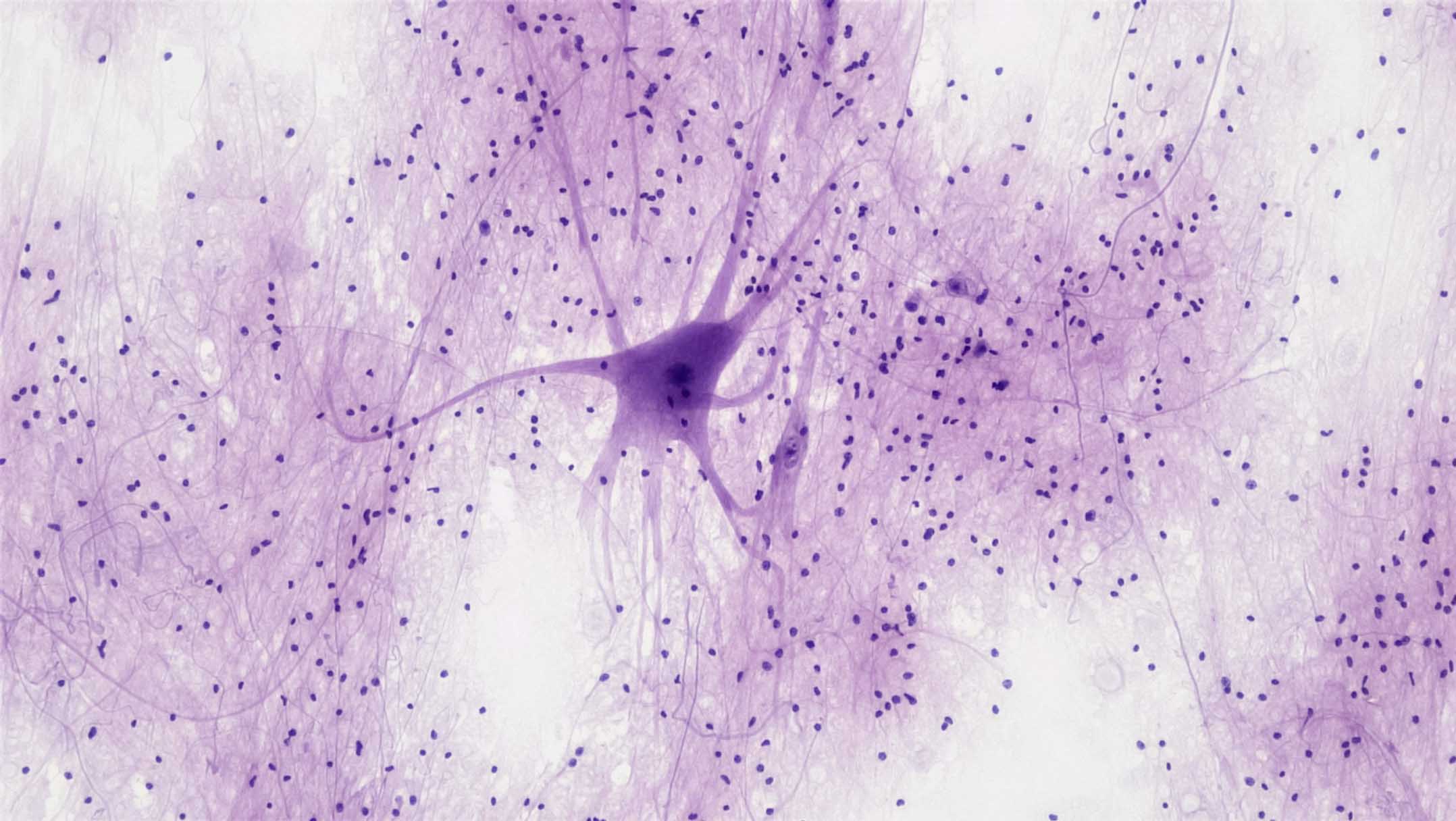

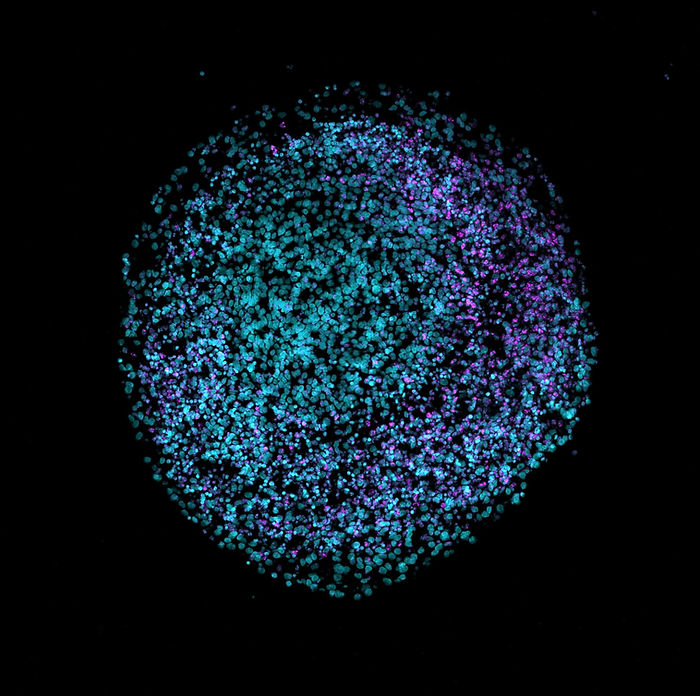

Advances in synthetic biology have produced systems, such as brain organoids and Cortical Labs’ “DishBrain,” that seem to echo aspects of brain function: neuronal development, learning and adapting to new tasks, even responding to drugs. If consciousness were ever to emerge in such systems, it would have serious implications for how we use them as tools and models.

Another hoped-for outcome of this research is the long-sought “test for consciousness” - a metric to determine whether a system, from an infant or coma patient to an AI or brain organoid, possesses subjective experience. Already, tools inspired by IIT, such as the Perturbation Complexity Index, are being used to detect hidden awareness in nonresponsive patients. However, a measure that could be applied to any system remains a coveted yet elusive goal.

A deeper understanding of consciousness would revolutionize medicine, offering new hope for coma recovery, dementia care, and mental health treatments targeting the subjective roots of anxiety or depression. In law, it could reshape definitions of responsibility and intent - challenging centuries-old notions of free will.

In ethics, it could clarify our obligations toward animals, AI systems, and even lab-grown organoids. The authors warn that, as with DNA or atomic physics, the ability to manipulate consciousness will demand new moral frameworks as much as new tools.

Cleeremans, Mudrik, and Seth ultimately argue that “solving consciousness” may not mean a single grand revelation, but a steady refinement of theories and methods, pursued with humility. Each step forward - whether a new neural signature, an AI benchmark, or a patient regaining awareness - redefines what it means to know ourselves.

In the coming decade, neurotechnology and consciousness science may converge into a field as transformative as genetics was for biology. The companies and researchers who bridge subjective experience and objective data will not only decode the brain - they may illuminate the essence of being itself.

Further Reading: